- According To Operant Conditioning What Is A Slot Machine An Example Offering

Learning Objectives

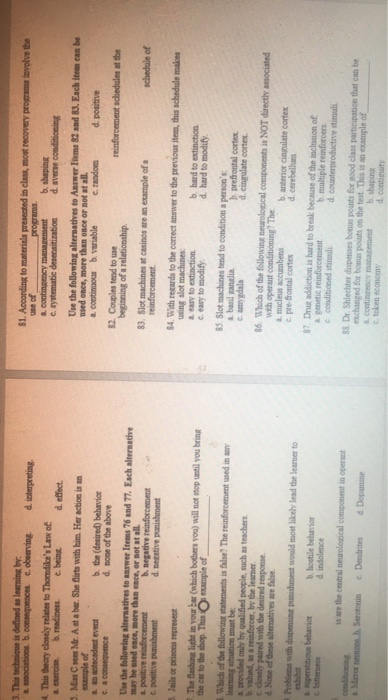

- Distinguish between reinforcement schedules

Slot machines, in particular, operate on a variable-ratio schedule, which is why people will spend hours pulling the lever in hopes of scoring big. In operant conditioning, a variable-ratio schedule is a schedule of reinforcement in which a response is reinforced after an unpredictable number of responses.

Remember, the best way to teach a person or animal a behavior is to use positive reinforcement. For example, Skinner used positive reinforcement to teach rats to press a lever in a Skinner box. At first, the rat might randomly hit the lever while exploring the box, and out would come a pellet of food. After eating the pellet, what do you think the hungry rat did next? It hit the lever again, and received another pellet of food. Each time the rat hit the lever, a pellet of food came out. When an organism receives a reinforcer each time it displays a behavior, it is called continuous reinforcement. This reinforcement schedule is the quickest way to teach someone a behavior, and it is especially effective in training a new behavior. Let’s look back at the dog that was learning to sit earlier in the module. Now, each time he sits, you give him a treat. Timing is important here: you will be most successful if you present the reinforcer immediately after he sits, so that he can make an association between the target behavior (sitting) and the consequence (getting a treat).

- Operant conditioning is useful in education and work environments, as well as for people wanting to form or change a habit, and, as you have already read, to train animals. Any environment where the desire is to modify or shape behavior is a good fit.

(a) Skinner developed operant conditioning for systematic study of how behaviors are strengthened or weakened according to their consequences. (b) In a Skinner box, a rat presses a lever in an operant conditioning chamber to receive a food reward. (credit a: modification of work by “Silly rabbit”/Wikimedia Commons)

In partial reinforcement, also referred to as intermittent reinforcement, the person or animal does not get reinforced every time they perform the desired behavior. There are several different types of partial reinforcement schedules (Table 1). These schedules are described as either fixed or variable, and as either interval or ratio. Fixed refers to the number of responses between reinforcements, or the amount of time between reinforcements, which is set and unchanging. Variable refers to the number of responses or amount of time between reinforcements, which varies or changes. Interval means the schedule is based on the time between reinforcements, and ratio means the schedule is based on the number of responses between reinforcements.

| Reinforcement Schedule | Description | Result | Example |
|---|---|---|---|
| Fixed interval | Reinforcement is delivered at predictable time intervals (e.g., after 5, 10, 15, and 20 minutes). | Moderate response rate with significant pauses after reinforcement | Hospital patient uses patient-controlled, doctor-timed pain relief |
| Variable interval | Reinforcement is delivered at unpredictable time intervals (e.g., after 5, 7, 10, and 20 minutes). | Moderate yet steady response rate | Checking Facebook |
| Fixed ratio | Reinforcement is delivered after a predictable number of responses (e.g., after 2, 4, 6, and 8 responses). | High response rate with pauses after reinforcement | Piecework: factory worker paid for every x items manufactured |
| Variable ratio | Reinforcement is delivered after an unpredictable number of responses (e.g., after 1, 4, 5, and 9 responses). | High and steady response rate | Gambling |
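The four schedules in the table can be read as simple rules for deciding whether a given response earns a reinforcer. The sketch below is only an illustration of those rules, not a model of any real experiment: the function names, the uniform distributions used for the "variable" schedules, and the abstract time units are all assumptions made for this example.

```python
import random

def fixed_ratio(n):
    """Reinforce every n-th response (time t is ignored)."""
    count = 0
    def respond(t):
        nonlocal count
        count += 1
        if count >= n:
            count = 0
            return True
        return False
    return respond

def variable_ratio(mean_n, rng):
    """Reinforce after an unpredictable number of responses.
    Assumption: the requirement is drawn uniformly from 1..2*mean_n-1,
    so it averages mean_n."""
    count = 0
    required = rng.randint(1, 2 * mean_n - 1)
    def respond(t):
        nonlocal count, required
        count += 1
        if count >= required:
            count = 0
            required = rng.randint(1, 2 * mean_n - 1)
            return True
        return False
    return respond

def fixed_interval(period):
    """Reinforce the first response made at least `period` time units
    after the previous reinforcement."""
    last = 0.0
    def respond(t):
        nonlocal last
        if t - last >= period:
            last = t
            return True
        return False
    return respond

def variable_interval(mean_period, rng):
    """Like fixed_interval, but the required wait changes each time.
    Assumption: waits are drawn uniformly from 0..2*mean_period."""
    last = 0.0
    wait = rng.uniform(0, 2 * mean_period)
    def respond(t):
        nonlocal last, wait
        if t - last >= wait:
            last = t
            wait = rng.uniform(0, 2 * mean_period)
            return True
        return False
    return respond

# One response per time unit, at t = 1, 2, ..., 12.
fr = fixed_ratio(4)
fr_hits = [t for t in range(1, 13) if fr(t)]   # [4, 8, 12]
fi = fixed_interval(5)
fi_hits = [t for t in range(1, 13) if fi(t)]   # [5, 10]
```

Under the fixed schedules, the reinforced responses fall at predictable positions. Swapping in `variable_ratio` or `variable_interval` with a seeded `random.Random` gives the same average rate but irregular gaps, which is the distinction the table draws.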

Now let’s combine these four terms. A fixed interval reinforcement schedule is when behavior is rewarded after a set amount of time. For example, June undergoes major surgery in a hospital. During recovery, she is expected to experience pain and will require prescription medications for pain relief. June is given an IV drip with a patient-controlled painkiller. Her doctor sets a limit: one dose per hour. June pushes a button when pain becomes difficult, and she receives a dose of medication. Since the reward (pain relief) only occurs on a fixed interval, there is no point in exhibiting the behavior when it will not be rewarded.
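June's situation can be sketched as a button with a lockout timer. This is a toy illustration of the fixed interval rule only, not a description of how any real medical device works; the class name `LockoutDispenser`, the minute-based clock, and the choice to allow the very first press are assumptions made for the example.

```python
class LockoutDispenser:
    """Hypothetical dispenser: at most one dose per lockout period."""

    def __init__(self, lockout_minutes=60):
        self.lockout_minutes = lockout_minutes
        self.last_dose_at = None  # no dose delivered yet

    def press(self, minute):
        """Return True if this button press delivers a dose."""
        if (self.last_dose_at is None
                or minute - self.last_dose_at >= self.lockout_minutes):
            self.last_dose_at = minute
            return True
        return False  # pressed too early: the behavior is not reinforced


pump = LockoutDispenser(lockout_minutes=60)
presses = [0, 10, 30, 59, 60, 75, 120]            # minutes at which June presses
delivered = [m for m in presses if pump.press(m)]  # [0, 60, 120]
```

Only the presses at 0, 60, and 120 minutes are rewarded; the presses in between accomplish nothing, which is why responding on a fixed interval schedule tends to pause after each reinforcement.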

With a variable interval reinforcement schedule, the person or animal gets the reinforcement based on varying amounts of time, which are unpredictable. Say that Manuel is the manager at a fast-food restaurant. Every once in a while someone from the quality control division comes to Manuel’s restaurant. If the restaurant is clean and the service is fast, everyone on that shift earns a $20 bonus. Manuel never knows when the quality control person will show up, so he always tries to keep the restaurant clean and ensures that his employees provide prompt and courteous service. His productivity regarding prompt service and keeping a clean restaurant are steady because he wants his crew to earn the bonus.

With a fixed ratio reinforcement schedule, a set number of responses must occur before the behavior is rewarded. Carla sells glasses at an eyeglass store, and she earns a commission every time she sells a pair of glasses. She always tries to sell people more pairs of glasses, including prescription sunglasses or a backup pair, so she can increase her commission. She does not care whether the person really needs the prescription sunglasses; Carla just wants her bonus. The quality of what Carla sells does not matter because her commission is not based on quality; it’s only based on the number of pairs sold. This distinction in the quality of performance can help determine which reinforcement method is most appropriate for a particular situation. Fixed ratios are better suited to optimize the quantity of output, whereas a fixed interval, in which the reward is not quantity based, can lead to a higher quality of output.

In a variable ratio reinforcement schedule, the number of responses needed for a reward varies. This is the most powerful partial reinforcement schedule. An example of the variable ratio reinforcement schedule is gambling. Imagine that Sarah, generally a smart, thrifty woman, visits Las Vegas for the first time. She is not a gambler, but out of curiosity she puts a quarter into the slot machine, and then another, and another. Nothing happens. Two dollars in quarters later, her curiosity is fading, and she is just about to quit. But then, the machine lights up, bells go off, and Sarah gets 50 quarters back. That’s more like it! Sarah gets back to inserting quarters with renewed interest, and a few minutes later she has used up all her gains and is $10 in the hole. Now might be a sensible time to quit. And yet, she keeps putting money into the slot machine because she never knows when the next reinforcement is coming. She keeps thinking that with the next quarter she could win $50, or $100, or even more. Because most types of gambling operate on a variable ratio schedule, people keep trying and hoping that the next time they will win big. This is one of the reasons that gambling is so addictive and so resistant to extinction.
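Sarah's experience can be imitated with a small simulation. The sketch below uses a fixed chance of winning on every pull (sometimes called a random ratio schedule, a close relative of the variable ratio schedule), which is a simplifying assumption. The win probability of 1 in 60 is a made-up number for illustration; only the quarter stake and the 50-quarter payout come from the story.

```python
import random

QUARTER = 0.25  # dollars per pull

def play(pulls, win_probability, payout_quarters, rng):
    """Simulate a number of pulls.
    Returns (net dollars, list of pulls needed for each win)."""
    net = 0.0
    gaps = []
    since_win = 0
    for _ in range(pulls):
        net -= QUARTER                 # every pull costs one quarter
        since_win += 1
        if rng.random() < win_probability:
            net += payout_quarters * QUARTER
            gaps.append(since_win)     # how many pulls this win took
            since_win = 0
    return net, gaps

def expected_net_per_pull(win_probability, payout_quarters):
    """Average dollars gained (negative means lost) per pull."""
    return QUARTER * (payout_quarters * win_probability - 1)

rng = random.Random(1)                 # seeded so the run is repeatable
net, gaps = play(1000, 1 / 60, 50, rng)
average_loss = -expected_net_per_pull(1 / 60, 50)  # about $0.04 per pull
```

With these assumed numbers the player loses about four cents per pull on average, yet the entries in `gaps` vary widely from one win to the next. That unpredictability is the feature the paragraph above points to: no single losing pull signals that the wins have stopped.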

In operant conditioning, extinction of a reinforced behavior occurs at some point after reinforcement stops, and the speed at which this happens depends on the reinforcement schedule. In a variable ratio schedule, the point of extinction comes very slowly, as described above. But in the other reinforcement schedules, extinction may come quickly. For example, if June presses the button for the pain relief medication before the allotted time her doctor has approved, no medication is administered. She is on a fixed interval reinforcement schedule (dosed hourly), so extinction occurs quickly when reinforcement doesn’t come at the expected time. Among the reinforcement schedules, variable ratio is the most productive and the most resistant to extinction. Fixed interval is the least productive and the easiest to extinguish (Figure 1).

Connect the Concepts: Gambling and the Brain

Skinner (1953) stated, “If the gambling establishment cannot persuade a patron to turn over money with no return, it may achieve the same effect by returning part of the patron’s money on a variable-ratio schedule” (p. 397).

Figure 2. Some research suggests that pathological gamblers use gambling to compensate for abnormally low levels of the hormone norepinephrine, which is associated with stress and is secreted in moments of arousal and thrill. (credit: Ted Murphy)

Skinner uses gambling as an example of the power and effectiveness of conditioning behavior based on a variable ratio reinforcement schedule. In fact, Skinner was so confident in his knowledge of gambling addiction that he even claimed he could turn a pigeon into a pathological gambler (“Skinner’s Utopia,” 1971). Beyond the power of variable ratio reinforcement, gambling seems to work on the brain in the same way as some addictive drugs. The Illinois Institute for Addiction Recovery (n.d.) reports evidence suggesting that pathological gambling is an addiction similar to a chemical addiction (Figure 2). Specifically, gambling may activate the reward centers of the brain, much like cocaine does. Research has shown that some pathological gamblers have lower levels of the neurotransmitter (brain chemical) known as norepinephrine than do normal gamblers (Roy et al., 1988). According to a study conducted by Alec Roy and colleagues, norepinephrine is secreted when a person feels stress, arousal, or thrill; pathological gamblers may use gambling to increase their levels of this neurotransmitter. Another researcher, neuroscientist Hans Breiter, has done extensive research on gambling and its effects on the brain. Breiter (as cited in Franzen, 2001) reports that “Monetary reward in a gambling-like experiment produces brain activation very similar to that observed in a cocaine addict receiving an infusion of cocaine” (para. 1). Deficiencies in serotonin (another neurotransmitter) might also contribute to compulsive behavior, including a gambling addiction.

It may be that pathological gamblers’ brains are different than those of other people, and perhaps this difference may somehow have led to their gambling addiction, as these studies seem to suggest. However, it is very difficult to ascertain the cause because it is impossible to conduct a true experiment (it would be unethical to try to turn randomly assigned participants into problem gamblers). Therefore, it may be that causation actually moves in the opposite direction—perhaps the act of gambling somehow changes neurotransmitter levels in some gamblers’ brains. It also is possible that some overlooked factor, or confounding variable, played a role in both the gambling addiction and the differences in brain chemistry.

Operant conditioning is a learning process whereby deliberate behaviors are reinforced through consequences. It differs from classical conditioning, also called respondent or Pavlovian conditioning, in which involuntary behaviors are triggered by external stimuli.

With classical conditioning, a dog that has learned the sound of a bell precedes the arrival of food may begin to salivate at the sound of a bell, even if no food arrives. By contrast, a dog might learn that, by sitting and staying, it will earn a treat. If the dog then gets better at sitting and staying in order to receive the treat, then this is an example of operant conditioning.

Operant Conditioning and Timing

The core concept of operant conditioning is simple: when a certain deliberate behavior is reinforced, that behavior will become more common. Psychologists commonly describe four main processes in operant conditioning:

- Positive reinforcement

- Negative reinforcement

- Punishment

- Extinction

Timing and frequency are very important in reinforcement.

- A continuous reinforcement schedule (commonly abbreviated CRF) provides reinforcement for all noted behaviors. That is, every time the behavior occurs, reinforcement is provided.

- An intermittent reinforcement schedule (commonly abbreviated INT) reinforces some target behaviors but never all of them. Think of it like a slot machine. You won't win on every pull of the lever, but you do win sometimes, and that reinforces the behavior of pulling the lever.

Examples of Positive Reinforcement

Positive reinforcement describes the best known examples of operant conditioning: receiving a reward for acting in a certain way.

- Many people train their pets with positive reinforcement. Praising a pet or providing a treat when they obey instructions -- like being told to sit or heel -- both helps the pet understand what is desired and encourages it to obey future commands.

- When a child receives praise for performing a chore without complaint, like cleaning their room, they are more likely to continue to perform that chore in the future.

- When a worker is rewarded with a performance bonus for exceptional sales figures, she is inclined to continue performing at a high level in hopes of receiving another bonus in the future.

Examples of Negative Reinforcement

Negative reinforcement is a different but equally straightforward form of operant conditioning. Negative reinforcement rewards a behavior by removing an unpleasant stimulus, rather than adding a pleasant one.

- An employer offering an employee a day off is an example of negative reinforcement. Rather than giving a tangible reward, they reduce the presence of something undesirable; that is, the amount of time spent at work.

- In a sense, young children condition their parents through negative reinforcement. Screaming, tantrums and other 'acting out' behaviors are generally intended to draw a parent's attention. When the parent behaves as the child wants, the unpleasant condition - the screaming and crying - stops. That's negative reinforcement.

- Negative reinforcement is common in the justice system. Prisons will sometimes ease regulations on a well-behaved prisoner, and sentences are sometimes shortened for good behavior. The latter in particular is classic negative reinforcement: the removal of something undesirable (days in prison) in response to a given behavior.

Examples of Punishment

In psychology, punishment doesn't necessarily mean what it means in casual usage. Psychology defines punishment as something done after a given deliberate action that lowers the chance of that action taking place in the future. Whereas reinforcement is meant to encourage a certain behavior, punishment is meant to discourage a certain behavior.

- An employee who misses work may suffer a cut in wages. The loss of income (an undesired consequence) constitutes the punishment for missing work (an undesired behavior).

- A sharp 'No!' addressed to a pet engaging in unacceptable behavior is a classic example of punishment. The shout punishes the pet, conditioning it to avoid that behavior in the future.

- Punishments are commonly used in lab experiments. Most often, a lab animal is punished for a given behavior with a mild electric shock.

Just as there are examples of positive reinforcement and negative reinforcement, there are also examples of positive punishment and negative punishment. With positive punishment, something unpleasant is added after the behavior, as with the sharp 'No!' and the electric shock above. With negative punishment, something pleasant is removed when an undesired behavior is performed, as with the cut in wages above. For example, a parent may take a favorite toy away from a child who is misbehaving.
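The positive/negative and reinforcement/punishment labels come from two separate questions: was a stimulus added or removed, and did the behavior become more or less likely? A minimal sketch of that two-by-two scheme follows. The function name and the string labels are chosen for this illustration only.

```python
def classify_consequence(stimulus_change, behavior_effect):
    """Name the operant process.

    stimulus_change: 'added' or 'removed' (what happened after the behavior)
    behavior_effect: 'increases' or 'decreases' (what happens to the behavior)
    """
    sign = {"added": "positive", "removed": "negative"}[stimulus_change]
    kind = {"increases": "reinforcement", "decreases": "punishment"}[behavior_effect]
    return f"{sign} {kind}"


# Examples drawn from the text above:
treat_for_sitting = classify_consequence("added", "increases")      # positive reinforcement
shorter_sentence = classify_consequence("removed", "increases")     # negative reinforcement
sharp_no = classify_consequence("added", "decreases")               # positive punishment
toy_taken_away = classify_consequence("removed", "decreases")       # negative punishment
```

Note that "positive" and "negative" here describe adding or removing a stimulus, not whether the outcome is pleasant.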

Examples of Extinction

Psychology defines extinction as the loss of conditioning over time when the conditioning stimuli are no longer present. Over time, an animal (or person) will become less conditioned unless the stimuli that conditioned them in the first place are reapplied.

- An employee punished once for missing work, then never again, may become more likely to miss work later on because they no longer expect to be punished for absence.

- Animals often test the limits of their conditioning. For instance, a cat punished with a spray bottle every time it climbs on a counter may come near the counter, or jump on the counter when it believes no one is around. If no punishment occurs, the cat is likely to keep jumping on the counter because the conditioning against it is extinct.

- In school, if a student receives a gold star for an excellent test score, but does not receive more gold stars in subsequent tests, he may become increasingly unmotivated to perform well in future tests. The operant conditioning of the positive behavior (doing well on a test) is becoming extinct.

B.F. Skinner and Conditioning

Burrhus Frederic Skinner was a psychologist and researcher credited with establishing the principles of operant conditioning. B.F. Skinner began with Thorndike's law of effect, which states that behaviors that cause satisfactory results will be repeated. Skinner considered satisfaction to be insufficiently specific to measure, and set out to design a means of measuring learned behaviors.

The operant conditioning chamber, popularly known as a Skinner box, was his solution. He kept his test subjects, primarily pigeons and rats, in circumstances that allowed him to closely observe their behavior. He would isolate the animal and every time the animal performed a defined behavior, like pushing a lever, it'd be rewarded with food. When the animal began to reliably push the lever, he'd know it had been conditioned.

Skinner's work took that first principle and applied it to human behavior, helping to define the school of psychology called behaviorism. Behaviorism dominated much of psychology through the middle of the 20th century, and it is now commonly combined with other psychological perspectives.

Operant Conditioning and You

It can be uncomfortable to talk about human behavior in the clinical language of psychology. That said, operant conditioning describes a simple phenomenon that happens in every part of life. It's just one of the mechanisms by which people learn. It's vital to understand how that mechanism works to make sure it works best for you.

For more on the science behind conditioning, check out our article on Examples of Behaviorism. It's the school of psychology that focuses on observable behavior, rather than emotions or motives, to explain how and why people do the things they do.